Here is a short, fictionalised, autobiographical play I wrote. All of the parts are played by me.

Business Analyst: We need to transfer files from place to place, we need a progress bar to tell us how much is complete and an estimate of how long to go.

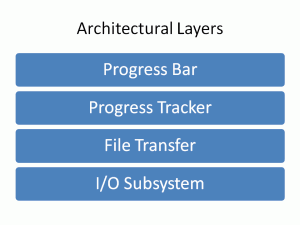

Programmer: Okay, let’s have a layered architecture.

At the bottom is the built-in I/O subsystem, that takes packets and transfers them.

Above that is File Transfer layer, which takes care of breaking the file into packets, and sending them down to the I/O subsystem. It notifies its client with a message for every 256 KB transfer completed.

Above that is the Progress Tracker layer. It monitors the messages, and estimates how long there is to go. Estimation is a black-art. We may need to try several different strategies here until we find one we can trust.

Finally, at the top is a simple Progress Bar, that uses the estimates to display to the user.

Unit Test Advocate: Make sure you have an automated test suite that can be run at any time to convince everyone it is working.

Programmer: Okay, good idea. Let’s get started. First, I will build the File Transfer layer. Easy! Now in order to test it, I need to be able to simulate various transfer success and failure scenarios. Looks like I need to write a I/O subsystem simulator. Not quite so easy, but now it is done. Now, I have automated testing, and I trust my File Transfer layer.

Next, the Progress Tracking layer. I am going to use quadrilateral wavelet theory to perform the estimates. It was tricky, but it is done. I can use the I/O simulator to stimulate the File Transfer layer to test all the different scenarios in the Progress Tracking layer. Testing is a little painful, because there is a lot of working out of quadrilateral wavelet theory by hand, but done!

Now, the Progress Bar. This is fairly straightforward to write. There aren’t many test cases, but so let’s plonk it on top of the Progress Tracking layer. The test cases I have cover most of the code paths, and just a little bit more hand-computed quadrilateral wavelet theory, and I can cover the rest. The same computations can be used to throw in a few more cheap tests of the Progress Tracking layer. Done!

Unit Test Advocate: It took a while to develop those unit-tests, but now you can be confident it works.

Programmer: Yes, they are beautiful. I am so happy.

Business Analyst: Users are reporting that the progress bar sometimes goes backwards. Also, when it says there is an hour left to go, they leave for an hour and they come back and it isn’t done. They hate that.

Programmer: That’s not a coding bug; that is consistent with quadrilateral wavelet theory. It tends to underestimate. Let’s go with monopolar superpositioning theory. It tends to overestimate, which will make people less annoyed.

It’ll take me a while to implement that, and I need to maintain the old version in the meantime. I am going to create a parallel implementation of the Progress Tracking layer with a different name. When it is ready, I will just swap over the Progress Bar to import the new layer rather than the old one.

Whew! That was hard work. But it is done, and I have unit-tested it, with a lot more calculations by hand.

Now I can delete the Quadrilateral Wavelet implementation. Wait! My unit-tests for the Progress Bar depends on the exact results from the Quadrilateral Wavelet implementation. They won’t work with the results from the new implementation.

Oh well. Rather than delete it, I will just leave it in place and treat it simulator test code. The live code will use the Monopolar Superpositioning version of the Progress Tracking later. That is unit-tested. It will also use the Progress Bar (which is unit-tested with a simulator that happens to generate its results using some weird formula that doesn’t matter anymore). The API is simple enough, there were no changes to the Progress Bar required. There should be no integration problems. Look, there wasn’t. Perfect.

Business Analyst: Hey, I have a quick request. Users are reporting that the progress bar isn’t updated frequently enough. Change it to update every 1 KB transferred.

Programmer: NOOOO!

Business Analyst: What? That’s a simple enough change. It is one constant in the File Transfer layer. Shouldn’t take long at all. You have lots of unit-tests, so changes should be cheap and safe.

Programmer: But when I change the constant in the File Transfer layer, I also have to change the test cases for the File Transfer layer. Which is fine – that’s the accepted cost of writing unit-tests.

Then I have to change the equivalent constant in the formula used by Monopolar Superpositioning layer. And then I have to rework out all the Progress Layer test cases by hand, with the new numbers.

But, worse! Then I need to change the Quadrilateral Wavelet code – that I can barely remember and isn’t used in production code. And then fix its test-cases. And then recalculate all the test-cases I need to test the Progress Bar. This will take days.

That’s a lot of work to maintain the tests for the Progress Bar – a component that isn’t being touched and is already unit-tested and trusted, when the customers are calling for an unrelated new feature in the File Transfer Layer.

Maybe I could just throw-away the Progress Bar unit tests?

Unit Test Advocate: DON’T YOU DARE! One day, you will need to refactor it, and so you should maintain a working set of test cases until then. So, write a simulator for the Progress Layer to test the Progress Bar.

Programmer: The Progress Bar just imports the lower layer. I can’t just pass a new value in during the test.

Unit Test Advocate: So, your code isn’t made to be easy to test! Rewrite it to be parameterised by Progress Layer, so you can substitute a mock sub-layer.

Programmer: That’s a lot of refactoring. These unit-tests are starting to cost me a lot of time.

Unit Test Advocate: Refactoring is easy, if you have a good set of unit tests.

Programmer: Fuck off.

Julian: Bugger this for a joke. I have working code; I just can’t prove it. I am off to write a blog article.

Comment by Sunny Kalsi on February 9, 2011

Yeah… see… don’t do that!

Haven’t you heard of mock objects? Surely there’s a pymock…

Comment by Anonymous on February 9, 2011

Hey, I read the whole article, from top to bottom. I can relate to this. Sometimes customers are nuisance. They keep on requesting things without them thinking about schedules and how it impact to programmers.

tsk!

Comment by Julian on February 9, 2011

Sunny, you said:

but I heard:

PyMock exists as another way of creating a mock sub-layer, but that doesn’t mean it can be subsituted in without modifying the code under test to accept a parameter – a new sub-layer to call, rather than being hard-coded to always call the same one.

Comment by Julian on February 9, 2011

Anonymous,

Sarcasm? Poor comprehension? Spam? I am afraid I am confused by your response. I removed the link(s) to your blog for fear it was spam; sorry if that was a poor assessment.

If the lesson you took from this is that the customers are at fault, then maybe you need to read this top to bottom again.

Comment by Sunny Kalsi on February 9, 2011

OK I’m confused by what you wrote. Let me (hopefully re-)write it in my own words:

Each layer in the architecture should have an interface. That is, you should be able to call each layer below independent of implementation of the layers. So I’m going to use an example of the progress-bar – progress tracker interface (excuse the pseudo-code):

Interface ProgressTracker

{

getProgress()

}

Mock mock

ProgressTracker pt = mock.mock(ProgressTracker)

ProgressBar toTest(pt)

mock.expects(getProgress()).return(5);

checkEquals(5, pt.checkProgress()); // Or whatever

Then each layer is tested independently, with the exception of, say, the IO subsystem. Hence being a unit test.

Comment by Alastair on February 9, 2011

Too often I have conversations like this. Over-testing is very common, and sometimes puts people off unit testing altogether. It’s also a great way to add technical debt, as seems to be happening in the above dialogue.

Your Unit Test Advocate seems to have forgotten that unit tests should be written against required behaviour, not against a specific implementation. In the same way that a good Business Analyst specifies her requirements, the unit test should also test what the code should do, but not how.

Your programmer should have known that the Quadrilateral Wavelet algorithm was but one of many possible ways of estimating the time remaining, and designed their classes – and tests – accordingly.

Progress should never go backwards – that’s a unit test case because it’s a requirement.

It should be exactly X% complete after y bytes and z seconds – that’s not a unit test case because it’s not a requirement.

Maybe you want to be more strict on the requirements around the scenario that the first 20% of bytes are transferred in x seconds but then it takes 4x seconds to transfer the next 20%. What would the expected behaviour be at this point? The estimate should probably be revised downwards to reflect the lower transfer rate, and you can probably write a test case for that. But lower by how much? There is no requirement; it is an estimate after all. Hence no test case, and we can revise the algorithm at will.

Sunny: this has nothing to do with mocks, it’s purely about writing good unit tests.

Comment by Andrew on February 9, 2011

I’m going to pin this on my wall to remind me to cull my unit tests. (But if I add these asserts here, the test gets *even more powerful*… use your anger, Luke….).

Comment by Chris on February 9, 2011

It all depends on your definition of unit. To match the tone of the blog post this should probably be a Socratic dialogue, but my Ancient Greek is rusty.

I kind of agree with Alastair, though I don’t have Alastair’s unit testing dogma cajones.

Sheesh, with definitions like these…

I have a futule hope that one day the term unit testing will go away.

The practice of deep white-box testing that is often described as unit testing never seems productive in systems that experience more than a modest amount of change. If you’re doing continuous integration you need to build tests that minimise maintenance costs, maximise regression detection, and minimise test execution time. Usually what makes sense in the circumstances is (as Alastair describes) to test only at function interfaces against requirements – sometimes known as functional testing. Mocks are one way of enabling isolation of functional tests. I wouldn’t say mocking has nothing to do with contemporary unit testing.

If you’re building a flight control computer for a deep space probe, I suspect you will want to immerse your code in a layer of introspective white-box tests. If you’re formalizing a trading algorithm that was given to you on the back of a beer-mat you’re probably best to keep your tests at the Spec or BDD level.

Comment by Alastair on February 9, 2011

Sorry Chris maybe I should have been clearer. In the context of writing unit tests for a piece of code, “requirements” does not refer to the system as a whole, it refers to the requirements for the code under test. So it’s relatively easy to think up test cases for a class X by just asking yourself “what should an X do?”. In the example above it just happened that the requirements for the Progress Tracker were also requirements for the system as a whole, but I didn’t mean to imply that all unit test cases should be derived from user-level requirements.

This also means that in general you shouldn’t break encapsulation to perform unit tests. If the test case can’t provoke any change in externally-visible behaviour, then why test it at all? (OK there are performance and other non-functional tests which warrant breaking this rule here but you see what I mean)

This is why I dislike the terms black-box and white-box. I might test class X using only its public interface (black-box) and yet X is one component buried deeply within a larger system (white-box). Depends on your perspective.

I look forward to the day when I get my specs on the back of a beer mat.

Comment by AndrewD on February 9, 2011

I’m with Alastair on this.

Test the behaviors, not the implementations.

Screw the IEEE definition.

Comment by Julian on February 9, 2011

Sunny,

Here is the key line in your code that doesn’t match the original implementation:

The implication here is that a ProgressBar instance takes as a parameter the progress tracker instance that it is tracking. I am not arguing that this is a bad idea, and I have often written code that does this. However, it is not how I implemented it the first time, in this case.

When I say “Rewrite it to be parameterised by Progress Layer, so you can substitute a mock sub-layer,” I am saying that the code could be fixed up to be in exactly the style you have described. But it isn’t there now, and there is a cost to change it to put it in that style.

What style is it now? The file

FileTransferWithProgressBardirectly imports and instantiatesFileTransferWithMonopolarProgressEstimateswhich directly imports and instantiatesFileTransfer. Okay, they aren’t the real filenames, but you get the idea, hopefully.Compared to the original implementation, your description has the advantage of testability, at a tiny cost of performance and a small cost of code length and readability.

Comment by Julian on February 9, 2011

I don’t want to get caught up in the “Exactly how small is a unit?” discussion – I have fought in those trenches before. I am using the exact same set of test-tools to do unit-tests and functional tests, so I believe I can side-step that discussion, and blur between the two.

In the dialogue above, you may notice one point where I neatly stepped around the fact that I did NOT do functional testing to ensure that the Progress Bar layer worked with the Monopolar implementation, arguing the interface was unit-tested sufficiently.

Comment by Julian on February 10, 2011

Alastair,

I am seeing a contradiction, which suggests I haven’t understood.

Yes, I understand the desire for functional testing against the requirements.

At one level, the architectural specs just say “Component shall give an estimate between 0 and 100% that is monotonically increasing.”

And, yes, I can write some tests against that.

But at another level, the design specs say “Component will give an estimate based on the Quadrilateral Wavelet algorithm (Huygens, Knuth, et al. 1974).”

So, now, I can write some tests against that, confirming that the estimate should be exactly 43.0% after 15 messages and 35 seconds.

There is a lot of maths in the Quadrilateral Wavelet implementation, and it needs a lot of careful checking, even though I am aware there are other implementations possible.

This isn’t over-testing, is it?

So far, I haven’t broken encapsulation. It gets tricky because I have coded it in Python. With no compiler to pick up my type errors, suddenly getting code-coverage becomes very important as well – which means adding tests based on the structure of the code rather than the specs. Is this over-testing? It certainly adds fragility. [Edited to add: The test itself may not break encapsulation and may continue to pass even if the component is refactored, but the choice of test may have been selected to ensure a certain original code path was executed.]

But lets put that aside. Even without it, I don’t think this addresses my problem. I am using tested lower layers to drive the test cases of the top-level components, rather than mock objects or stubs. When there are changes to the lower layers, I need to spent a lot of time rewriting my test-cases – just to continue to functionally test the top layer of the code, which has had no change to it or its interfaces.

I (and my internal Unit Test Advocate) think Sunny was right to point the finger at the lack of mocking as being a problem. I am having trouble justifying the cost of getting there from here just to re-test that unchanged code I already trust. With hindsight, it should have been designed that way to begin with, but assuming every single component needs to accept mock objects along every single interface seems overkill… and it makes the

main()function rather cluttered with many design decisions being delegated upwards.Comment by Alastair on February 10, 2011

Yes, it is.

The best thing to do here is to design it on the understanding that alternative implementations of the algorithm are possible. As a programmer, you should be skilled in the art of working out which requirements are invariants and which are subject to change in the future. Good software design takes this into account. Hence with the Quadrilateral Wavelet algorithm, you should probably implement it separately from the rest of the Progress Tracker code. This, and the appropriate use of mocks, should avoid overtesting.

Consider a ProgressTracker class which implements the generic functionality, but defers to an algorithm for generating the estimates. The ProgressTracker would be event-based so that it would listen to events from the I/O layer, and produce events to indicate progress. There is not necessarily a 1:1 correspondence between the two, as you may want to update the estimate if some time passes without seeing some I/O. The estimate algorithm could be a simple function which is passed in on construction.

With this design I would definitely be looking to mock out the estimate algorithm in order to test the ProgressTracker. Provide it a “dumb” function with known and simple behaviour in order to test the ProgressTracker. It could even be as simple as counting down from 10 to 0 minutes each time it is called. The point is that it satisfies the requirements of an estimate algorithm, but no more. Because time is an important input to the ProgressTracker I would mock out the clock too. Then all your ProgressTracker test cases need to do is fire simulated IO events at simulated times, and check that it produces some corresponding progress events.

So then you can test your Quadrilateral Wavelet function in isolation. Here you don’t need to mock anything, just call the function directly with various known inputs (15 messages, 35 seconds) and check that it produces the required output (43.0%). When you replace this algorithm with the Monopolar Superpositioning algorithm, you shouldn’t have to change any existing test cases, just write new ones for the new algorithm (possibly based on the existing test cases because they should be pretty similar).

There are various ways of mocking out components, just as there are various ways of connecting them. In the above example I’ve tried to avoid having the ProgressTracker to know anything about the lower layers through the use of events. There are other options of course.

Comment by Julian on February 10, 2011

Alastair,

We – or at least you, and my internal Unit Test Advocate (Now IMPROVED with 100% hindsight!) – are getting closer and closer.

First, I agree that the replacement of the estimation algorithm was predictable, and indeed, it was predicted. Its replacement in the live code was relatively painless. Where I got caught was that I used it in the mocking for the next layer up, which gave me a maintenance problem.

Second, you advocate testing the algorithm separately from the layer. I agree in principle. I can point to other parts of my code where I have done something similar. However, in this hypothetical scenario, and the real world scenario it is based on, the layer is a very thin wrapper around the algorithm. Testing the algorithm separately from the rest of the layer wouldn’t buy much.

Testing the Progress Tracker layer separately from the layer below? That does makes sense. I would need to mock the file transfer layer to do that. When I went to do that, I thought I had a perfectly adequate mock layer written already – the live code for the file transfer layer sitting on a mocked I/O subsystem – I could use that to simulate all of the scenarios I needed. At this layer, that didn’t turn out to be a bad call. Carrying that same logic through to the next layer up has bitten me.

That is certainly where I point the finger – I need a decent mock Progress Tracker to test the Progress Bar. It is also something I am putting in my To Do list, because I can’t justify the time now.

Comment by Sunny Kalsi on February 10, 2011

Hmmm I have a lot of thoughts here, some disjointed.

The first is, the way you’ve implemented the code doesn’t really match the spirit of the architecture diagram. The diagram has each of the components separate, with some air in between them. The implementation is more like one box with a solid line around it, and each of the “layers” with dashed lines between them. That implies both the unit testing difficulty and the cost of changing the implementation. Either re-design the code or re-draw the architecture diagram, or you’ll be tricking yourself.

Secondly, you say:

You can use a factory to return the object, which is a common idiom, then in the tests instruct the factory to use a different object. If you want to use a different Progress Tracker algorithm you’ll need to change it in a convenient spot, and changing it in everything that uses that algorithm may be too onerous.

This is also what dependency injection frameworks are for (in Java there’s Guice and Spring for example). I guess Python may have something similar. This obviates the need for the factories, and mocking frameworks often plug right into . However, their one big disadvantage is that one day you’ll wake up and start to ask yourself if you never construct an object, are you really doing OO programming?

Thirdly, I think Alastair and I are in agreement (at least in his most recent comment). He’s just doing it all manually and I’m saying “use one of the available frameworks”. He’s also changing the architecture slightly in the process, to make it easier to test for your current situation. Another important thing he’s saying is “test the QWF as a function not as an object“. I totally agree, and it took my a while before I figured out that you need to think about testing functions and testing objects as separate things (more on this later).

Fourthly, you need to design your code to be tested. The way you’ll test your code and the way it is written are tightly coupled. Here’s how I think about unit testing in general, and it’s going to take one of my crazy analogies:

You can look at it like painting miniatures. The code is the miniature itself, and the unit test is the process you use to paint it. The way you create your miniature defines how (and whether) you can paint it. For example, if you have a piece which is like a bottle, it’ll be hard to paint inside the bottle. It’s better to design the bottle in halves and then glue them together, purely for the painting.

If you’re painting a component piece a single colour, you can call that “black box testing” because the procedure is guaranteed not to change: “paint it red” for example. If you have a piece with many colours, that’s white box testing, because each time you change the piece you have to change the instructions. If you add a ridge to a square on a piece, you have to write “Paint the square but not in the ridges” instead of just “paint the square”.

So the point is, if you can manufacture your miniature that each “unit” is a single colour (i.e. simple), then you’re good doing only black box testing of it. If it’s many colours (intricate), you have to do white box testing. This simultaneously answers the questions “what is a unit” and “do I do black box testing or white box testing” because both those questions depend on one another.

Whether a unit can be made simple enough to do black box testing of it depends on the design / architecture. I use a combination of both styles depending on how I want to test, and how I want to design.

There’s also a concept I use which I call “trivial code”. This code is trivial in the sense that it has no if statements or loops: You run through it once and you’re guaranteed complete coverage. It should be run in a functional test (if that), and doesn’t need to be unit tested. This is because it will definitely be run and will fail in a big way.

You write “trivial code” and unit test the functions that it calls. This is different from testing the object or interface, and it helps to isolate testing. This is what Alastair is suggesting for how to solve your problem (the progress tracker becomes “trivial code”).

Comment by Julian on February 10, 2011

So, after this discussion, very late last night, I decided to have a closer look at ProgressBar – or at least the much messier real-world equivalent it is based upon – to see what is involved in creating a mock layer beneath it. Maybe it was less work than I thought?

I discovered evidence that I had considered this problem before.

The first was some code in the constructor that looked like this:

self._progress_tracker_cls = MonopolarSuperpositioningProgressTrackerLater, when the progress tracker instance was required, it was constructed like so:

progress_tracker = self._progress_tracker_cls(constructor_params)This code construct allows a test case to construct the ProgressBar, and then pop its own mock Progress Tracker class in there, before it gets created.

It relies on Python’s weak encapsulation: everything is public, but putting an underscore in front is a convention (enforced by style checkers) to say “This isn’t actually part of my public interface.”

Does this violate encapsulation? Yeahno. The comments in the class make it clear it is defining a secret interface for its “friends” (in the C++ sense) to override default behaviour for testing.

This brings the total number of different mechanisms used in my project to slip in a mock object for testing to four. 🙁

However, looking at the code, which is more complex than the fictional Progress Bar would suggest, I see at least three other interfaces to underlying objects that also need to be mocked – including the clock.

In fact, the file has grown moderately large and complicated, and I suspect it could be, with a lot of thought – broken up into two or three other classes.

Which brings me to the other line of code. The first one in the file:

# TO DO: Refactor into smaller parts, that can be tested faster.It seems I don’t just have hindsight, but some foresight as well. To bad I haven’t acted on that, yet.

Comment by Julian on February 10, 2011

Sunny, a quick caveat before I digest all of your message:

The following (buggy) code has no if statements or loops:

permitted_on_premises = patron.is_sober and (patron.age > 18 or patron.is_accompanied_by_adult)It needs to be executed more than once to test it. It needs quite a few test cases before you determine that unaccompanied, sober, 18-year-old patrons are wrongly evicted.

Comment by Julian on February 10, 2011

Your criticism of the architectural diagram is simply reading too much into a ill-defined diagram I threw together as quickly as possible to illustrate a hypothetical architecture in a blog post. My UML diagram drawing tool of choice? PowerPoint (because I don’t own a Visio license).

The biggest disadvantage of dependency injection frameworks is not waking up with weird thoughts about whether you are doing OO. The frameworks have a large cost in complexity and understandability of the code; they are a part of the “too enterprisey” push-back we are hearing from developers. I stand by my decision not to use them by default, even though in this situation, dependency injection would have been justified. Factories have a smaller cost, but it is not trivial. I use factories on a minority of classes. Oddly enough, I paraphrased some code in a comment above. Originally, it said:

progress_tracker = self._progress_tracker_cls.factory(constructor_params)but I dropped the factory when I put it in the comment, precisely to remove the unnecessary complexity in understanding it.

The more we discuss this, the more you and Alastair are lining up with my internal unit-test advocate, and the more my internal pragmatist programmer is complaining that this is perfect for some cases, but need not be the default template for every class – which I am pretty sure is a strawman.

Comment by Sunny Kalsi on February 10, 2011

Oops. The “trivial code” has literally got to be a “do this, then do this, then do this”. The “this” should be functions which are tested. I was implicitly assuming it had no outputs, nor was it calculating anything for passing into the functions.

As for “too enterprisey” a lot of these frameworks are, but many are coming up that aren’t. In the end you have to ask yourself “Am I a professional or just someone who likes to hack?” You could argue that programming itself is too hard or learning a framework for, say drawing images, is too hard so “I’ll write my own” (which many do, and that’s why there’s so many available), but that’s not how modern software engineering should work. You need to start using modern idioms and they will become clearer over time.

Having said that, the idioms people use in statically typed C-like languages may not be appropriate for a modern dynamically typed language. There may well be something more appropriate for you. If you can find it or develop it then great, otherwise you have to choose between covering code the hard way or praying.

Comment by Aristotle Pagaltzis on February 11, 2011

I’m too late to make any new points (use DI; use mocks; testability does not happen by itself), but let me recommend reading Miško Hevery’s weblog from start to finish.